11-Multimodal-BLIP #220

Reference: HswOAuth/llm_course#220

Base environment:

NVIDIA GeForce RTX 3080 (10240 MiB)

The step5 task uses 1260 MiB of GPU memory

The step6 task uses 1888 MiB of GPU memory
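
The notes do not say how these memory figures were read. A minimal sketch, assuming they come from `nvidia-smi`, of how the used memory could be queried from Python. The helper names `parse_used_mib` and `query_used_mib` are hypothetical, and `nvidia-smi` reports usage for the whole GPU, not for a single process.

```python
import subprocess


def parse_used_mib(csv_text):
    """Parse nvidia-smi CSV output (no header, no units) into one MiB integer per GPU."""
    return [int(line.strip()) for line in csv_text.splitlines() if line.strip()]


def query_used_mib():
    """Ask nvidia-smi for the used memory of every GPU (requires an NVIDIA driver)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_used_mib(out)
```

Calling `query_used_mib()` while the step5 or step6 script is running would return a list such as one value per installed GPU, which can then be compared against the card's 10240 MiB total.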

step1: Download the source code

step2: Set up the environment

step3: Download the models

step4: Modify the model file paths

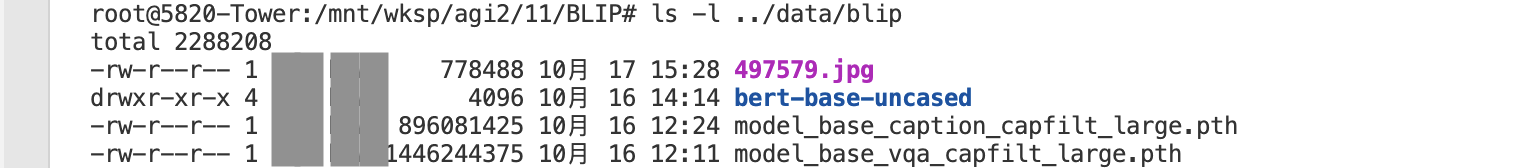

Path where the models are stored

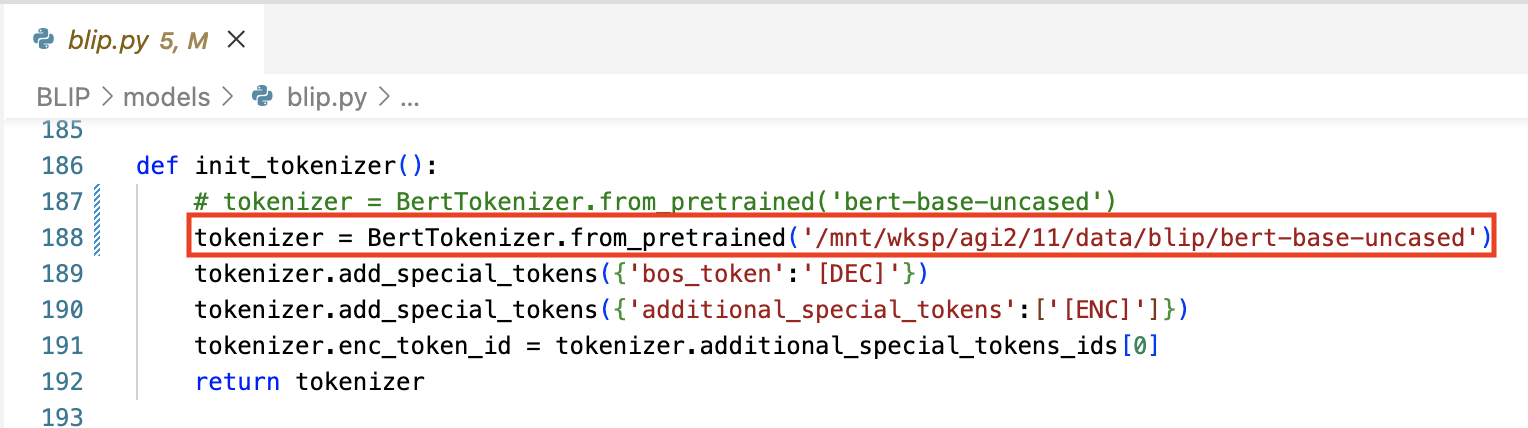

Because the bert-base-uncased model was not placed under the BLIP directory, the model loading path was changed in BLIP/models/blip.py.
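
The exact change is only shown in the screenshot, so the following is a rough sketch of one way to make such a path change robust, not the edit that was actually made. It assumes the tokenizer in `BLIP/models/blip.py` is loaded with `BertTokenizer.from_pretrained(...)`. The helper name `resolve_bert_path` and the example directory are hypothetical.

```python
import os


def resolve_bert_path(local_dir, hub_name="bert-base-uncased"):
    """Return local_dir if it contains a BERT vocab file, otherwise fall back to the Hub name."""
    vocab = os.path.join(local_dir, "vocab.txt")
    if os.path.isdir(local_dir) and os.path.isfile(vocab):
        return local_dir
    return hub_name


# Assumed usage inside BLIP/models/blip.py (the directory is a placeholder):
#   tokenizer = BertTokenizer.from_pretrained(resolve_bert_path("/path/to/bert-base-uncased"))
```

With this fallback, the same code works whether the model sits in a custom local directory or has to be fetched by name, so the hard-coded path does not need to be edited again when the files move.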

step5: Use the fine-tuned BLIP model (the Image Captioning model) to generate captions for images

step6: Run the VQA task with the model