04-Chinese LLaMA Alpaca series models: OpenAI API call implementation (follow-along exercise) - deploying a local "ChatGPT" #24

Reference: HswOAuth/llm_course#24

Server

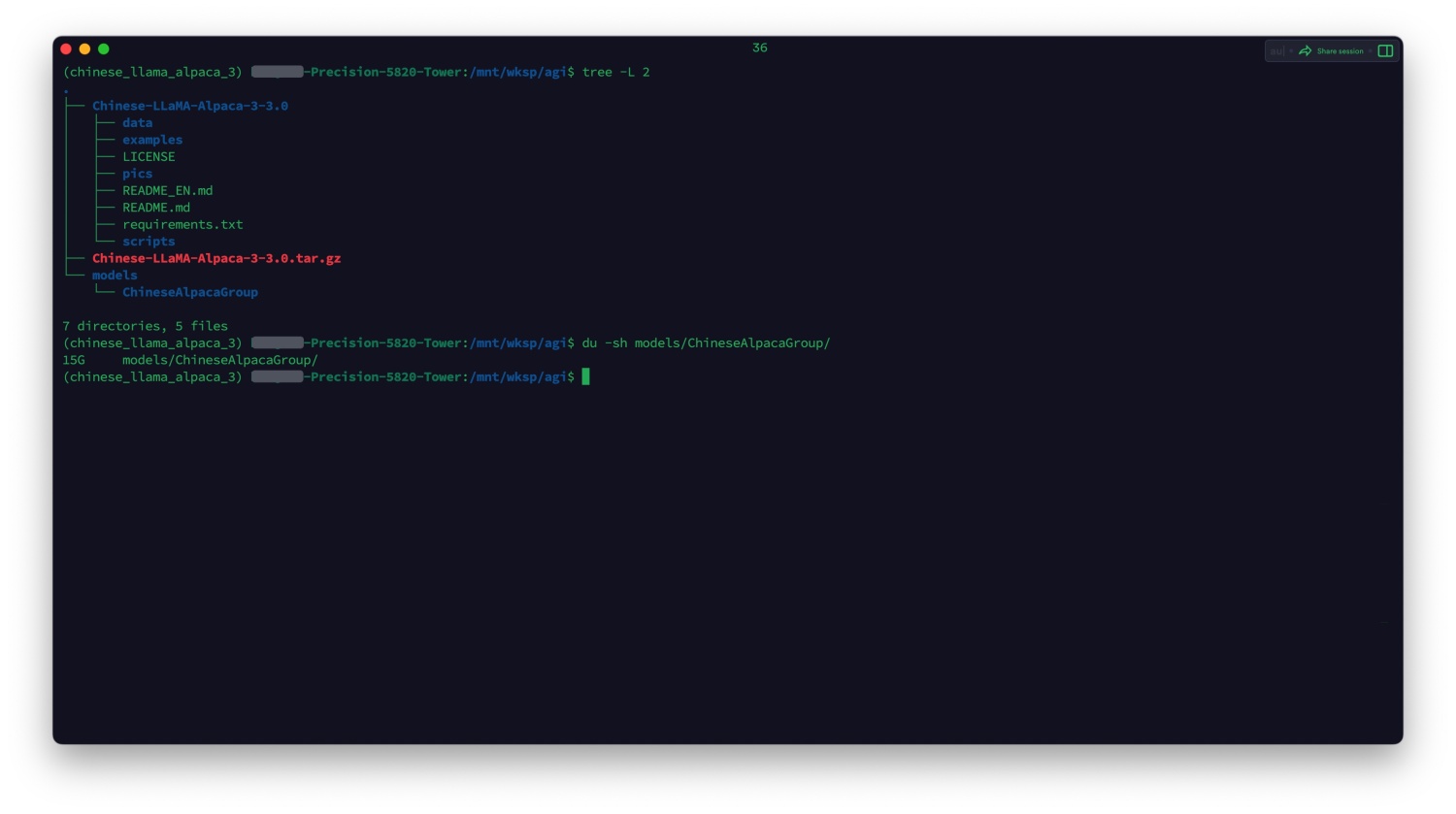

step1: Download the source code

wget https://file.huishiwei.top/Chinese-LLaMA-Alpaca-3-3.0.tar.gz

tar -xvf Chinese-LLaMA-Alpaca-3-3.0.tar.gz

step2: Download the model

pip install modelscope -i https://mirrors.aliyun.com/pypi/simple

modelscope download --model ChineseAlpacaGroup/llama-3-chinese-8b-instruct-v3 --cache_dir /mnt/wksp/agi/models
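Before moving on, it may be worth confirming that the download landed where step5 expects it. The following is a minimal sketch, assuming the model directory follows the usual Hugging Face layout with a `config.json` and a `tokenizer_config.json`. The file list and the helper name `missing_model_files` are illustrative assumptions, not part of the course material.

```python
import os
from typing import Iterable, List

# Assumed minimal file set for a Hugging Face style checkpoint; adjust as needed.
REQUIRED_FILES = ("config.json", "tokenizer_config.json")


def missing_model_files(model_dir: str,
                        required: Iterable[str] = REQUIRED_FILES) -> List[str]:
    """Return the required files that are absent from model_dir."""
    return [name for name in required
            if not os.path.isfile(os.path.join(model_dir, name))]


if __name__ == "__main__":
    # Path taken from the --base_model argument used in step5.
    model_dir = "/mnt/wksp/agi/models/ChineseAlpacaGroup/llama-3-chinese-8b-instruct-v3/"
    missing = missing_model_files(model_dir)
    print("model directory looks complete" if not missing else f"missing: {missing}")
```

An empty result only means the named files exist; it does not verify that the weight files are complete.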

step3: Create a virtual environment

conda create -n chinese_llama_alpaca_3 python=3.8.17 pip -y

conda activate chinese_llama_alpaca_3

cd Chinese-LLaMA-Alpaca-3-3.0/scripts/oai_api_demo/

pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple

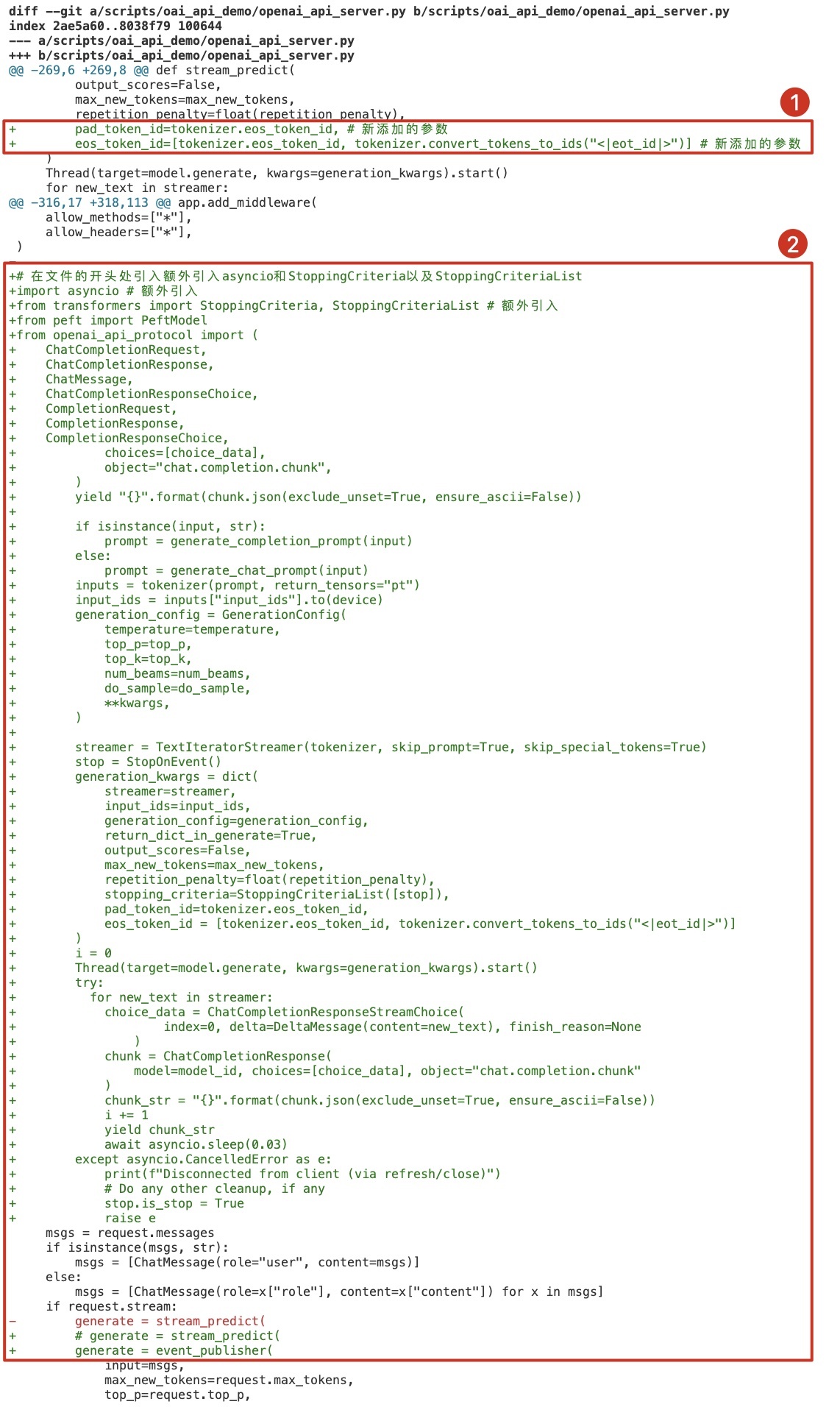

step4: Modify the file

Chinese-LLaMA-Alpaca-3-3.0/scripts/oai_api_demo/openai_api_server.py

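Modification ①: add stop tokens so that the reply does not repeat itself. This makes the server compatible with Llama 3's own stop tokens; without them, the content returned by the streaming endpoint keeps repeating and never stops.

Modification ②: release resources when the user stops a reply. Before this change, in long-text generation scenarios the script kept occupying the GPU until the entire result had been generated, even if the user had ended the reply early.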

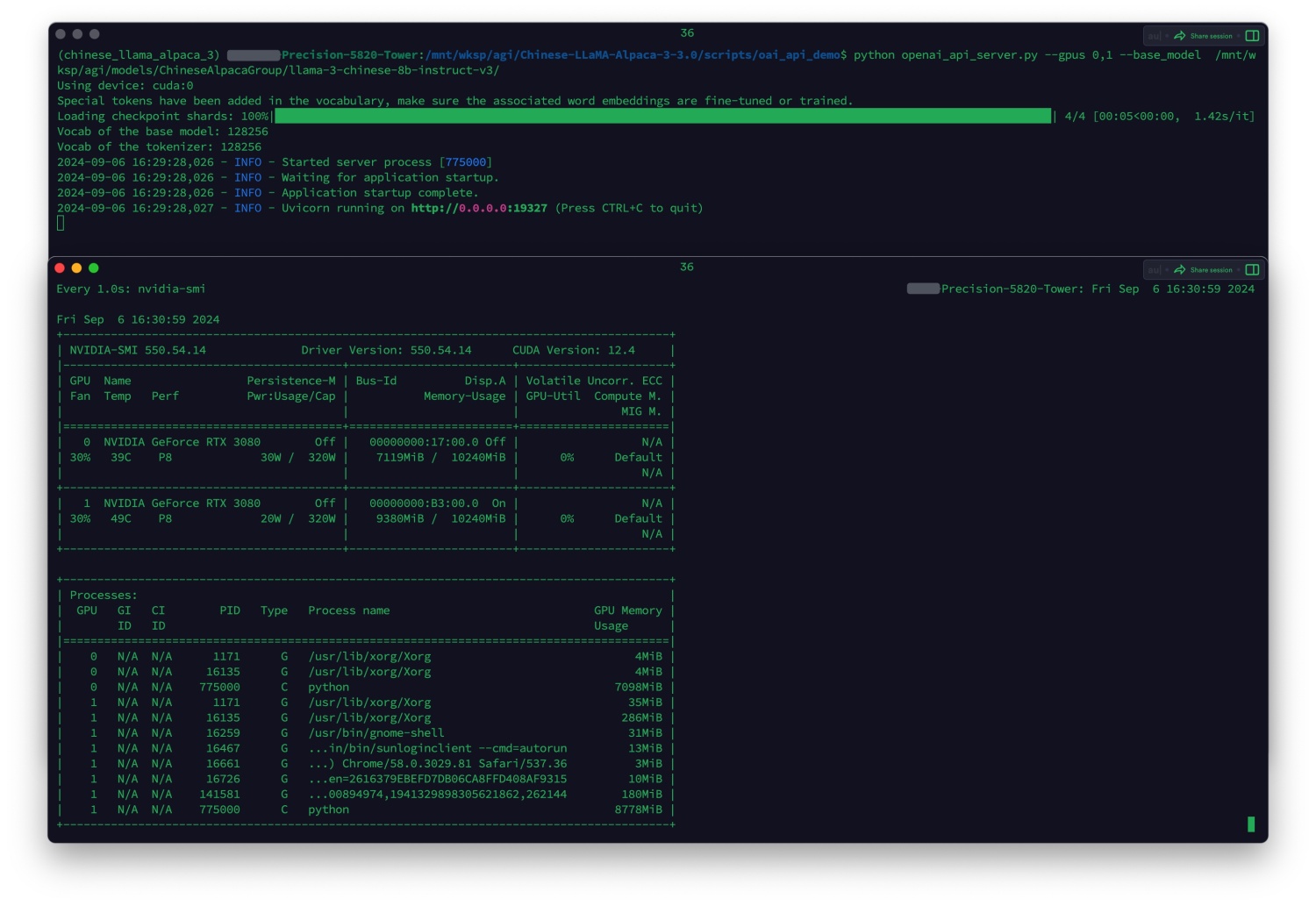
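The exact edits are in the screenshot; the idea behind the two modifications can be sketched roughly as follows. This is not the code from `openai_api_server.py`: the helper names `stop_token_ids` and `stream_until_disconnect` are hypothetical, and the sketch only assumes a tokenizer object exposing `eos_token_id` and `convert_tokens_to_ids` (as Hugging Face tokenizers do) and an async "is the client gone?" check.

```python
from typing import AsyncIterator, Awaitable, Callable, Iterable, List

# Llama 3 end-of-turn and end-of-text markers.
LLAMA3_STOP_TOKENS = ("<|eot_id|>", "<|end_of_text|>")


def stop_token_ids(tokenizer) -> List[int]:
    """Collect the tokenizer's EOS id plus the ids of Llama 3's stop tokens."""
    ids = [tokenizer.eos_token_id]
    for token in LLAMA3_STOP_TOKENS:
        token_id = tokenizer.convert_tokens_to_ids(token)
        if token_id is not None and token_id not in ids:
            ids.append(token_id)
    return ids


async def stream_until_disconnect(
    chunks: Iterable[str],
    is_disconnected: Callable[[], Awaitable[bool]],
    on_cancel: Callable[[], None],
) -> AsyncIterator[str]:
    """Yield chunks, but stop and call on_cancel() once the client has gone."""
    for chunk in chunks:
        if await is_disconnected():
            on_cancel()  # e.g. set a flag that a stopping criterion checks
            break
        yield chunk
```

With Transformers, a list like the one returned by `stop_token_ids` can be passed as `eos_token_id` to `model.generate`. In a FastAPI/Starlette handler, `request.is_disconnected` fits the `is_disconnected` parameter, and `on_cancel` would be whatever mechanism the server uses to tell generation to stop.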

step5: Start the service

cd Chinese-LLaMA-Alpaca-3-3.0/scripts/oai_api_demo/

python openai_api_server.py --gpus 0,1 --base_model /mnt/wksp/agi/models/ChineseAlpacaGroup/llama-3-chinese-8b-instruct-v3/

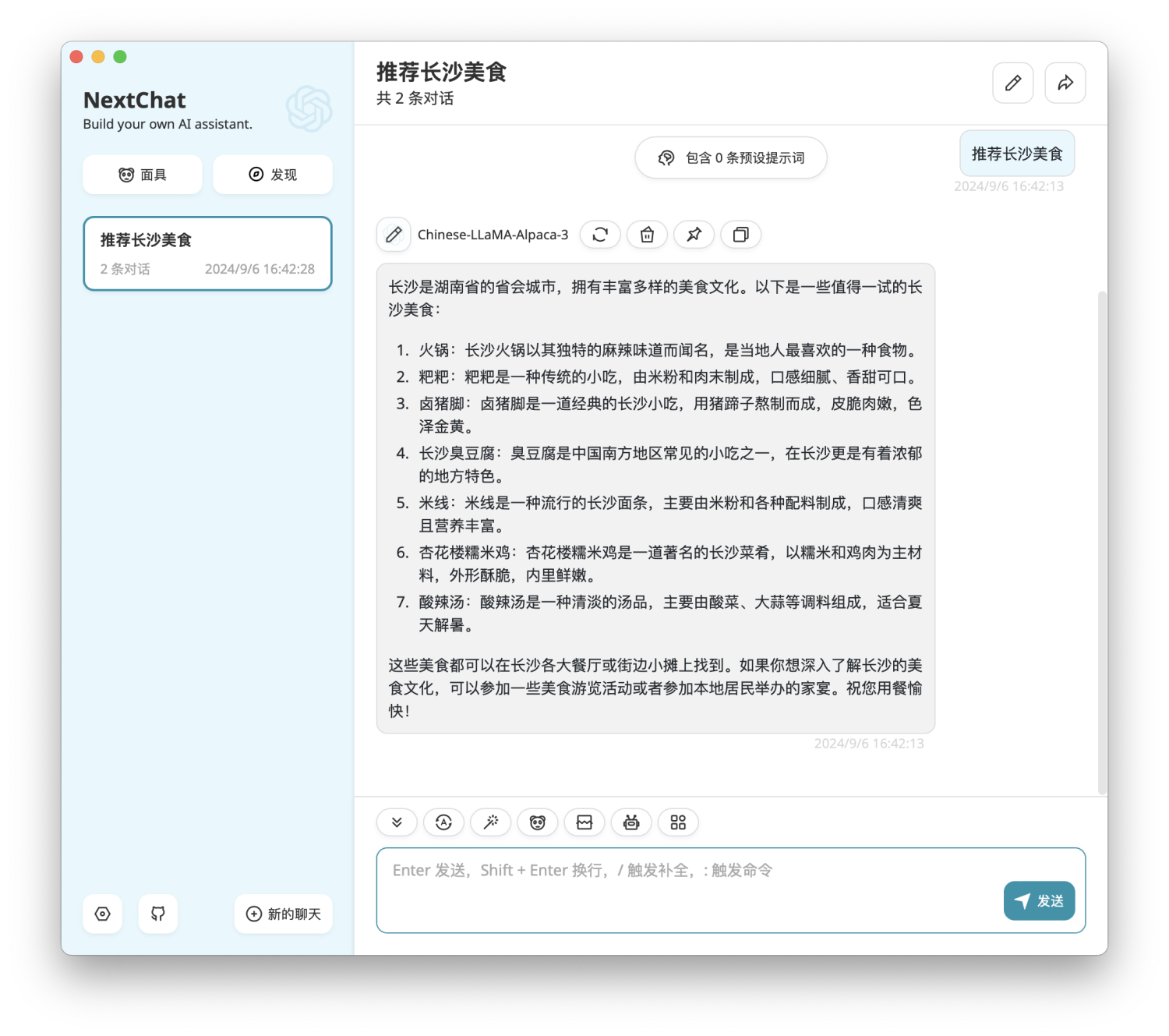
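Once the server is up, a quick smoke test from Python can confirm that the OpenAI-style endpoint answers before any client is configured. A minimal sketch using only the standard library; the address `http://localhost:19327/v1` and the model name `llama-3-chinese` are assumptions, so substitute the host and port the server prints on startup and whatever model name you prefer.

```python
import json
import urllib.request

# Assumed address; use the host and port shown in the server's startup log.
BASE_URL = "http://localhost:19327/v1"


def build_chat_request(prompt: str, model: str = "llama-3-chinese",
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat-completions format."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the running server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("你好")` requires the server from this step to be running; `build_chat_request` can be inspected without it.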

Client

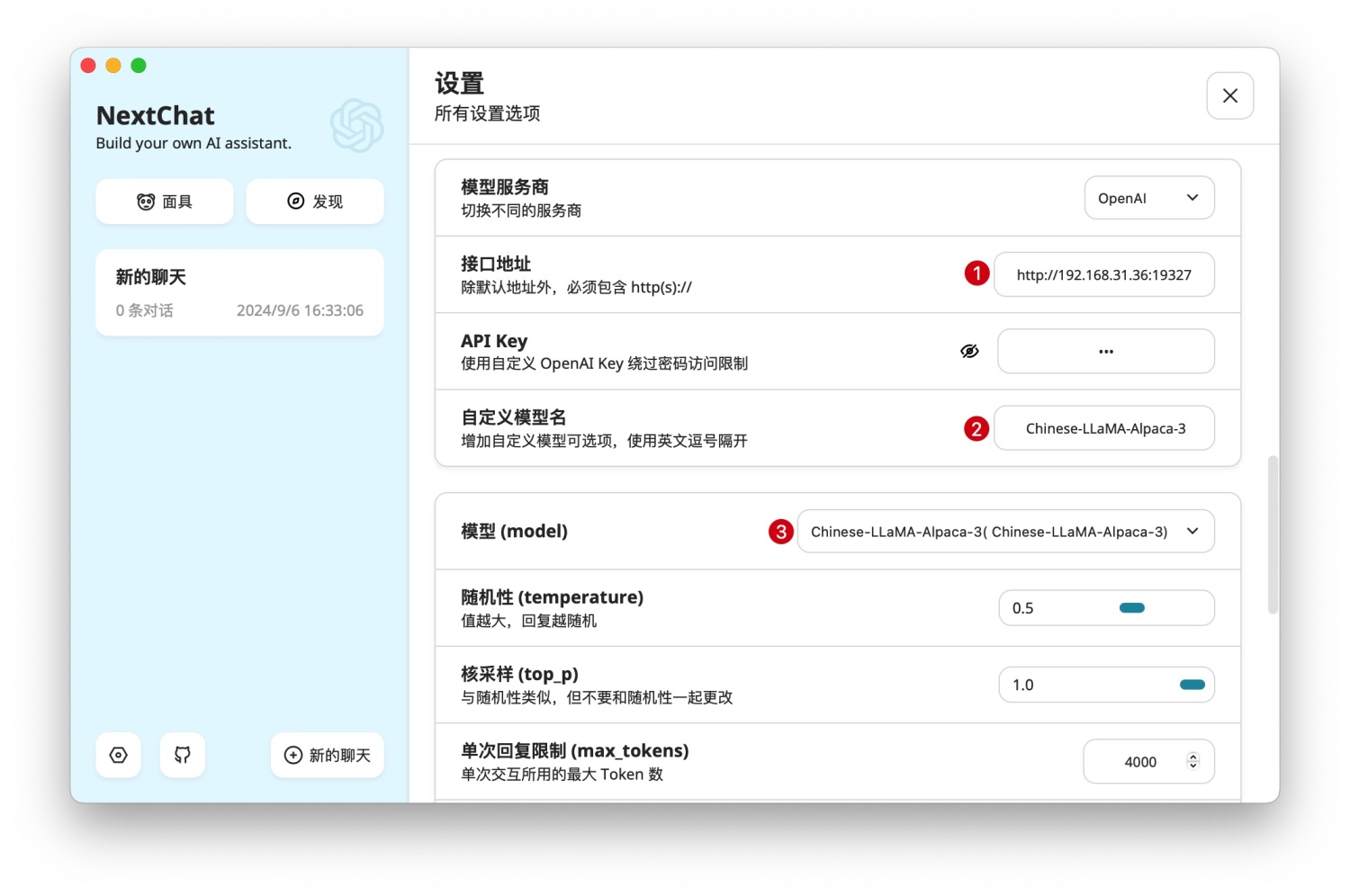

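step1: Configure the client

① Enter the endpoint address of the service you started

② Give the local model a custom name

③ Select the model

step2: Ask questions using the deployed local model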
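When the client streams a reply, it reads the response as server-sent events in the OpenAI format: each line starts with `data:` and carries a JSON chunk, and the stream ends with `data: [DONE]`. A minimal sketch of that parsing, assuming the server follows this format; the function name `iter_stream_text` is hypothetical.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text pieces carried by OpenAI-style streaming lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything that is not data
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker
        choices = json.loads(data).get("choices") or []
        if choices:
            piece = choices[0].get("delta", {}).get("content")
            if piece:
                yield piece
```

If the stop tokens from step4 are missing on the server side, a loop like this never reaches `[DONE]`, which matches the endless repetition described there.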
